This is a presentation that Marc Karbowiak and I gave at a local testing meetup group called YVR Testing on 2 April 2014.

PDF version of slides: BDD Intro

Intro

Disclaimers first: I am not an expert in Behaviour Driven Development (BDD); in fact, I am just starting down this particular learning path. I have, however, been testing and, to a lesser extent, automating tests for many years, so I have learnt enough to know that this approach is a great one to try, as I can see how it will help to address many of the issues we experience. In particular, it will clearly help prevent the types of issues that arise from misunderstandings, assumptions and ambiguity in our requirements.

Marc and I wanted to share some of our early experiences, and those of other, more experienced folks who preceded us in learning BDD, as we feel strongly enough about this approach that we want to encourage and inspire others to learn and adopt BDD practices.

(slide 2) Setting the scene

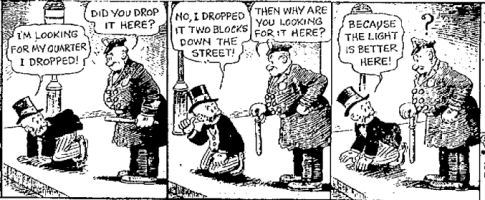

We have probably all experienced ambiguous requirements, which means we have probably all experienced problems in our products as a result.

A simple example:

- Product Manager (PM) & Interaction Designer (IxD) require a text box to have a 100 character limit.

- Test automator leaves the requirements discussion, goes back to his desk and writes tests that will drive the UI to write one character and assert that the UI shows character count of 1

- Developer leaves the same discussion, goes back to his desk and writes code which will show 100 characters and count down every time a character is entered.

- The test fails and then a discussion ensues to figure out which one understood the requirement appropriately.

I have experienced many of these types of situations, quite often where the PM says that neither the tester nor the developer understood them correctly. In other words, both developer and tester misinterpreted the requirements, and now both need to redo or refactor their work to deliver what the PM really wanted.
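
To make the ambiguity concrete, here is a minimal Python sketch of the two readings of the "100 character limit" requirement described above. The function names and the `LIMIT` constant are hypothetical and not taken from any real product.

```python
LIMIT = 100  # the only number the requirement actually gave us


def counter_as_tester_read_it(text: str) -> int:
    """Tester's reading: the counter shows how many characters were typed."""
    return len(text)


def counter_as_developer_read_it(text: str) -> int:
    """Developer's reading: the counter shows how many characters remain."""
    return LIMIT - len(text)


# After typing a single character the two readings disagree, so a test
# written against one reading fails against code written to the other.
typed = "a"
tester_expects = counter_as_tester_read_it(typed)
developer_shows = counter_as_developer_read_it(typed)
print(tester_expects, developer_shows)  # prints: 1 99
```

Neither function is wrong on its own terms; the requirement simply never said which behaviour was wanted.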

(slide 3) Are you often testing at the end of the cycle?

Perhaps you are in a waterfall like development lifecycle, or a fragile lifecycle?

Do the testers know ahead of time what they will test? Did they work that out from either a written requirements document or a requirements discussion at the beginning?

Or are the testers working with the developers, product managers and in our case interaction designers on a regular (daily?) basis to ensure we are always on the same page and on track to deliver what is really required?

(slide 4) Are you in an agile like environment?

In which case do you all speak the same language? Do you have a domain specific language that is understood and used by all?

I have been in many requirements discussions, story kickoffs or similar where it really seems like we are talking different languages.

In my current role we have a lot of domain specific terms that are either overused (used to mean more than one thing depending on context) or mean different things to different people. We recently had a problem where one team used the term ‘system variables’ to mean a specific kind of data we store about a member of one of our insight communities, while another team wanted to use the same term for data we capture and store about a survey respondent’s computer system (e.g. browser and version, screen resolution and browser locale).

As a test, why not ask 10 different people what a test plan is and see if you get 10 different answers?

(slide 5) Deadline approaching?

Does this mean you usually cut a few corners, rush your work, ignore some aspects of your process that perhaps you don’t think provide good value for the time they take?

So, why don’t we have time to do it right but we have time to do it twice?

(I forget where I first heard that phrase but it is a really powerful one for me)

We often cut corners or rush to meet a deadline, knowing really that we will have to come back and ‘fix it up’ or pay down some ‘technical debt’ later. And of course the cost of that will be higher than the cost of doing it the first time round.

(slide 6) A well-known illustration of:

a) What the senior developer/designer designed

b) What got delivered

c) How it was installed at the customer site

d) What the customer really wanted

This is typically the result of some of the ways we have been working and the approaches we take.

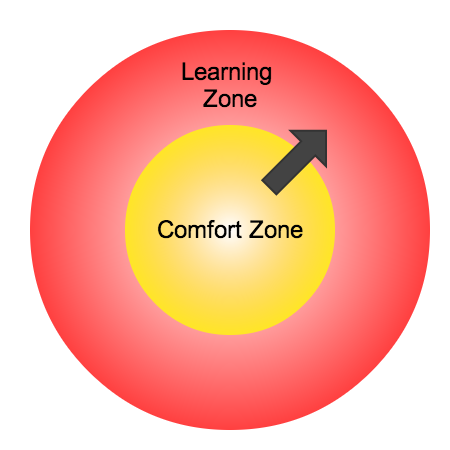

(slide 7) So how can we be better?

We can adopt the test-first approach: combine the red-green-refactor pattern of TDD with behaviours to get BDD

Defining a test first and then writing the code to pass that test provides many benefits;

- Only write the code needed to pass the test (no waste)

- Ensures code is testable – cannot pass test otherwise – requires observability etc

- Test effectively documents the code

- Safety net – test is added to CI system ensuring we never regress this code (if we do the test fails) – future code refactoring is done with confidence as we will know, as quickly as it takes to run these tests, if we have made a mistake
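
As a rough illustration of the test-first loop, the sketch below writes the check before the code it exercises. `remaining_characters` and its limit of 100 are hypothetical names borrowed from the text box example earlier; a real project would use a test runner rather than bare assertions.

```python
# Step 1 (red): write the test first. Run on its own, before
# remaining_characters exists, this would fail with a NameError.
def test_counter_counts_down_from_limit():
    assert remaining_characters("") == 100
    assert remaining_characters("a") == 99
    assert remaining_characters("a" * 100) == 0


# Step 2 (green): write only the code needed to make the test pass.
def remaining_characters(text: str, limit: int = 100) -> int:
    return limit - len(text)


# Step 3 (refactor): change the implementation freely and re-run the test;
# it acts as the safety net that tells us if the behaviour changed.
test_counter_counts_down_from_limit()
```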

(slide 8) Where do I start?

Another way to describe the test first pattern is the Acceptance Test Driven Development approach.

This fantastic diagram is borrowed from (add refs and links)

The four Ds

The approach follows the TDD red-green-refactor cycle shown in the middle, i.e. it is iterative

(slide 9) Discuss

Ensure you have representation from all of the key roles to discuss the story or item of work. Typically we refer to the 3 amigos – Product Management (or Business Analyst or if possible the customer), Development and QA. At Vision Critical we also often have a 4th amigo – an interaction designer (IxD)

The discussion needs to unify the language, ensuring we are all discussing the same thing and have common and shared understanding

For example ensure we don’t mix development terms with product or customer terms, try to define a domain specific language (DSL) that we can all share and understand.

(slide 10) Distill

The discussion should then produce examples of the behaviour you want from the product or system. These examples are effectively how you will test that you have developed what was really needed.

Use a ubiquitous language and structure to define these – Given – When – Then

Facilitates clear communication as well as structure that is easy to read and simple to follow
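
One way to picture how a distilled example maps onto an automated check is to keep the Given, When and Then phases visible in the test itself. This is only a sketch in plain Python; the `CommentBox` class, its `type_text` method and the 100 character limit are hypothetical.

```python
class CommentBox:
    """Hypothetical text box that refuses input beyond a character limit."""

    def __init__(self, limit: int = 100) -> None:
        self.limit = limit
        self.text = ""

    def type_text(self, new_text: str) -> None:
        # Keep only as many characters as still fit within the limit.
        room_left = self.limit - len(self.text)
        self.text += new_text[:room_left]


def test_text_beyond_the_limit_is_not_accepted():
    # Given an empty comment box with a 100 character limit
    box = CommentBox(limit=100)

    # When I type 101 characters
    box.type_text("x" * 101)

    # Then only the first 100 characters are kept
    assert len(box.text) == 100


test_text_beyond_the_limit_is_not_accepted()
```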

(slide 11) Develop

First develop the automation that asserts the behaviours (automate the tests first)

Then develop the code (production code) to pass those tests

(slide 12) Demo

Demonstrate the working code using the automated tests

Review the behaviour specifications with the customer or product manager

Add the tests to your CI system (keep running the tests to ensure fast feedback and a consistent safety net of tests)

Time to celebrate and perhaps retrospect on the story and capture anything you learnt and ideas for improvement so that you can apply all to the next story

(slide 13) Repeat the cycle for all the stories, learning and improving as you go.

(slide 14) Results

Hopefully you will have delivered what the customer really wanted and gained some additional benefits;

- Executable specifications that can always be trusted to be true (otherwise your tests will be failing)

- Automated regression tests that provide a safety net and fast feedback on those regressions – try to have these tests run as frequently as possible

- Testable and thus maintainable code, not only can you look back at these tests in 6 months and know how the code works, but you also know the code is testable as it was written to pass tests

You will hopefully also feel proud of what you have achieved and will be recognised for that – if you are the only team doing this it will show!

(slide 15) What BDD is not

This all sounds great, so where do I get hold of this silver bullet or pink glittery unicorn?

Well BDD is not one of those, in fact it takes a lot of work to do it well, but it is worth it

(slide 16) How to get started?

There are a number of different BDD frameworks for the mainstream development languages, here are a few

- SpecFlow is for .Net

- Cucumber is mostly for Ruby

- JBehave is for Java

- Behat is for PHP

(slide 17) Gherkin anyone?

As well as being a pickled cucumber …

Gherkin provides the common and ubiquitous language that facilitates the simple and clear communication of behaviour

Here is an example of a feature, which contains a number of scenarios (tests for that feature)

The feature description here describes the feature and the context, in this case the problem the feature is trying to solve

(slide 18) Background

Background is a special keyword in Gherkin

In this case I am showing an example from the Cucumber Book that uses the background to set up the test preconditions – the Given for the following scenario(s)

(slide 19) Scenario

Here are the When and Then sections of this scenario

Very readable, understandable and clear

(slide 20) A question of style

A lot of folks (myself included) start writing scenarios in a similar way to how we would write test cases or code, by detailing all the steps that we need to execute in order to set up the product under test as well as perform the test and check the results.

This is an imperative style and it is not very readable, at least not when you are testing anything less trivial than adding two whole numbers.

So we need to focus on a more declarative style, try to tell the story of the behaviours we want the product to have

This means hiding all of the details that are not relevant to the behaviour and keeping only the details that are important to the behaviour or the intent of the test

(slide 21) An imperative example

Lots of inconsequential detail here which means that the intent of the test is lost in the noise. We specify the email address and password but have to assume this means these are valid. Do we really need to know we clicked the login button? How does that help us understand if the product behaves correctly when we provide valid login credentials?

(slide 22) A declarative example

Hopefully you can all see this is much more readable and very clearly talks about what is important to the behaviour

This test is not about what makes a valid or invalid email address or password; it is about what happens when those are valid and the user is able to log in successfully

(slide 23) DRY vs DAMP

Aim to tell a story rather than focusing on re-usability

An example here would be that we have steps like those in the imperative example;

Given I am on the login page

When I enter email as “[email protected]”

And password as “Password1”

And I click the login button

Because these steps detail how I log in, we can easily re-use the same steps throughout the scenarios to log in before executing more steps that are designed to assert a new behaviour, for example;

Given I am on the login page

When I enter email as “[email protected]”

And password as “Password1”

And I click the login button

And I click the Start New Project button on the dashboard page

Then I should see a blank project

And I should be able to edit the project

Instead of re-using these steps this could have been written as;

Given I am on the dashboard page (it is not important for this behaviour to know what steps you took to login or what exact credentials you used)

When I start a new project

Then I should be able to edit my new project
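
In the automation layer, one way to support the declarative wording is to push the login mechanics into a helper so that the scenario only states intent. The sketch below uses a fake in-memory `App` class; every name in it is hypothetical and stands in for whatever drives your real UI.

```python
class App:
    """Hypothetical stand-in for the product under test."""

    def __init__(self) -> None:
        self.page = "login"
        self.projects = []

    def log_in(self, email: str, password: str) -> None:
        # The real steps (fill email, fill password, click) would live here.
        self.page = "dashboard"

    def start_new_project(self) -> dict:
        project = {"name": "", "editable": True}
        self.projects.append(project)
        return project


def given_i_am_on_the_dashboard_page() -> App:
    # Credentials are a detail of this step, not of the story being told.
    app = App()
    app.log_in("any.valid.user@example.com", "any-valid-password")
    return app


def test_starting_a_new_project():
    # Given I am on the dashboard page
    app = given_i_am_on_the_dashboard_page()
    # When I start a new project
    project = app.start_new_project()
    # Then I should be able to edit my new project
    assert project["editable"]


test_starting_a_new_project()
```

If the login flow changes, only `given_i_am_on_the_dashboard_page` needs to change; the scenario wording stays the same.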

(slide 24) Scenario Table Example

Use data tables to test with multiple values that would not read well if they were all written on one line of a Given, When or Then

These enhance readability by keeping the data clear but separate from the declarative and meaningful phrases

(slide 26) Scenario Outline Example

Sometimes you need to test using the same steps but with a variety of test inputs. A scenario outline helps to avoid repeating the steps for each set of data values

In this case each row of the table is essentially one Given – When – Then scenario and will be executed sequentially
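
The same idea can be sketched outside Gherkin as a table-driven test: one set of steps, one row of data per scenario. The password rule and the example rows below are invented purely for illustration.

```python
def is_valid_password(password: str) -> bool:
    """Hypothetical rule: at least 8 characters, including a digit."""
    return len(password) >= 8 and any(ch.isdigit() for ch in password)


# Each row plays the part of one line in a Scenario Outline's Examples table.
EXAMPLES = [
    # (password, expected_valid)
    ("Password1", True),
    ("short1", False),
    ("NoDigitsHere", False),
]


def run_outline(examples):
    """Run the same Given-When-Then steps once per row, in order."""
    results = []
    for password, expected_valid in examples:
        # Given a password / When it is validated / Then the outcome matches
        results.append(is_valid_password(password) == expected_valid)
    return results


print(run_outline(EXAMPLES))  # prints: [True, True, True]
```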

(slide 28) Hooks

These are really useful: they can simply call some code to set up or tear down your tests, and are controlled using methods called Before and After
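
As a rough picture of what a hook does, the sketch below wraps a scenario with `before` and `after` functions. It is plain Python, not the API of Cucumber or any other framework, and all names are hypothetical.

```python
events = []  # records the order in which things ran


def before(context: dict) -> None:
    # Set up: e.g. create test data or start a browser.
    context["user"] = "test-user"
    events.append("before")


def after(context: dict) -> None:
    # Tear down: e.g. delete test data or close the browser.
    context.clear()
    events.append("after")


def run_scenario(scenario) -> None:
    context = {}
    before(context)
    try:
        scenario(context)
    finally:
        # The after hook runs even if the scenario fails.
        after(context)


def scenario_user_is_available(context: dict) -> None:
    events.append("scenario")
    assert context["user"] == "test-user"


run_scenario(scenario_user_is_available)
print(events)  # prints: ['before', 'scenario', 'after']
```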

(slide 29) Tags

Use these to label your features and scenarios within features

You can then execute only those features or scenarios that are tagged a certain way

Or filter out tests with a different tag

We use tags to group tests by team, to run certain subsets of tests in a certain environment, and now we are trying to use tags as a way of recording and reporting test coverage by labelling features and scenarios by the code area that they cover
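
Tag filtering can be pictured as selecting scenarios by label. The sketch below is plain Python with invented scenario names and tags; real frameworks have their own syntax for tag expressions.

```python
SCENARIOS = [
    {"name": "Log in with valid credentials", "tags": {"@team-a", "@smoke"}},
    {"name": "Start a new project", "tags": {"@team-b", "@smoke"}},
    {"name": "Export a large report", "tags": {"@team-b", "@slow"}},
]


def select(scenarios, include=None, exclude=None):
    """Keep scenarios that have the `include` tag and lack the `exclude` tag."""
    chosen = []
    for scenario in scenarios:
        if include is not None and include not in scenario["tags"]:
            continue
        if exclude is not None and exclude in scenario["tags"]:
            continue
        chosen.append(scenario["name"])
    return chosen


# Run only the smoke tests.
print(select(SCENARIOS, include="@smoke"))
# Run team B's tests, but filter out the slow ones.
print(select(SCENARIOS, include="@team-b", exclude="@slow"))
```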

And that wraps it up for the presentation part.